OSD 285: Benjamin, get the musket

Degauss the police?

The cofounder of Aerodome, a drone-as-first-responder company, tweeted this the other day:

(For context, Flock makes license plate readers and camera systems for police agencies and private property owners, especially HOAs and large property managers.)

Someone responded to Rahul’s tweet to say they’ve been excited about the possibility of this collab:

The screenshots in that tweet are examples of criminals that widely deployed cameras and first-responder drones would have helped catch:

On the one hand, the obviously correct response is:

People should be shooting these things out of the sky on sight, and OSD should be funding a bounty for every quadcopter’s head that you bring us.

We even wrote a whole edition of the newsletter about this back in April:

OSD 269: The social credit system has missiles now

Guns are about individual power. Every disagreement about gun ownership comes down to who’s allowed to be unilaterally powerful. That’s what we focus on around here.

But on the other hand … is that actually right? What’s the principle there? That police shouldn’t have cutting-edge technology? There was a time when radios were cutting-edge technology. Then it was cars. Then it was computers. If you need the cops, you’d want them to have all of those things.

Maybe the principle is that police shouldn’t have technology that can be abused. But the trouble with that is that every technology can be abused. Cameras are a zeitgeisty example, but hell, there’s a piece of tech we talk about every week that’s easy to abuse — a gun. “The police, but only equipped with tech they can’t abuse” is the same as saying “the police, but equipped with no tech at all”.

It helps to think about what the goal is. Everyone from Lysander Spooner to David D. Friedman to J. Edgar Hoover agrees that wherever law comes from and whoever is doing the job of enforcing those laws, you want the law enforcers to be highly competent. Competence requires training, equipment, and well-run internal systems.

No great company would see some tech that’s going to make it much better at its job and say, “No, let’s not buy it, it would speed us up too much.” So if a police force is going to be well-run, it shouldn’t say that either.

But a company can’t shoot you or take away your rights. A police force can.

So let’s add one more goal: the law enforcers should be highly competent at safeguarding your rights, and extremely incompetent at taking them away. But that’s just a question of intent, right? A cop’s tools don’t care whether he’s showing up to take away your attacker or take away your guns. How do you arm the police with awesome tech to do the former without making it easier for them to do the latter?

The answer is actually central to gun rights: the way to solve problems with a new technology is to make more new technology. From “OSD 246: Eroom’s law”, quoting Steven Sinofsky:

the [executive order on AI] is from a culture that wants to regulate away tech problems instead of allowing people to innovate them away. “The best, enduring, and most thoughtful [scifi] writers who most eloquently expressed the fragility and risks of technology also saw technology as the answer to forward progress. They did not seek to pre-regulate the problems but to innovate our way out of problems. In all cases, we would not have gotten to the problems on display without the optimism of innovation.”

So a self-regulating system for modern police tech is something you’ve seen on gun forums forever: the police can have whatever they want, as long as I can have it too.

That doesn’t solve every implication, but it’s a fine starting point to reason from.

This week’s links

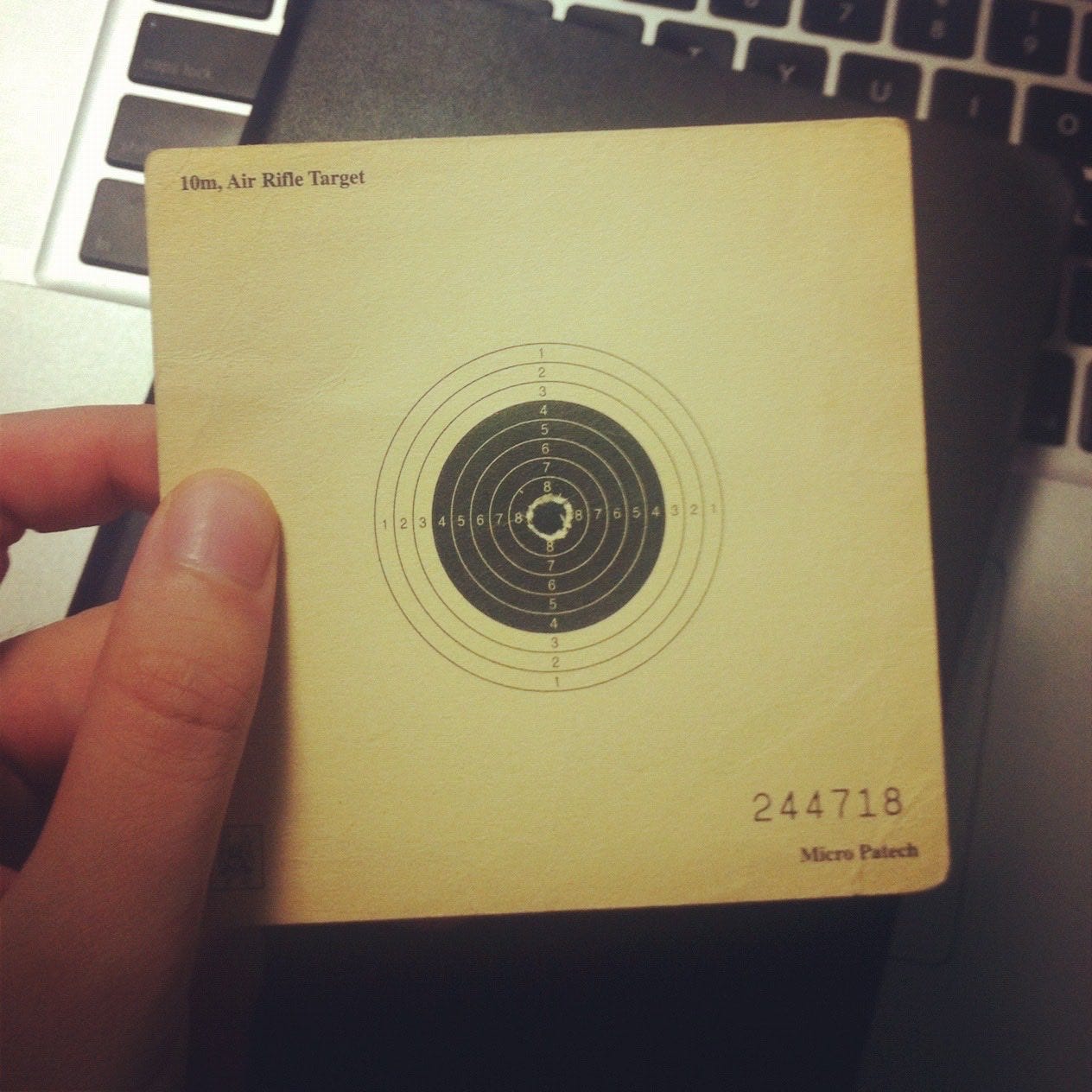

Thread on Olympic target shooting glasses, from a former member of Singapore’s national air rifle team

Here’s what the 10-meter target looks like after they’ve fired 100 shots at it:

“An App Called Napster”

On the topic of not being able to fight what technology unleashes. h/t Discord subscriber @kaldor-hicks.

About Open Source Defense

Merch

Grab a t-shirt or a sticker and rep OSD.

OSD Discord server

If you like this newsletter and want to talk live with the people behind it, join the Discord server. The OSD team is there along with lots of readers.

Commenting while reading, but

> The cofounder of Aerodome, a drone-as-first-responder company, tweeted this the other day:

This tweet is one of the stupidest tweets I have ever read, because it assumes a technological solution to a social problem.

Crime happens in San Francisco because the government of San Francisco does not want to stop it. Making it easier to stop will not change San Francisco's unwillingness to stop it. San Francisco's criminality is not a result of insufficient resources. It is intentional. If nothing else, we saw that when Xi came to town.

They literally can stop crime tomorrow if they want to. They do not do this. Why would drones make them change their minds?